Jane Lanhee Lee of Bloomberg reports on why Nvidia believes its new Blackwell chip is key to the next stage of AI. Lee writes:

Nvidia Corp. unveiled its most powerful chip architecture at its annual GPU Technology Conference, an event some analysts have dubbed "Woodstock for AI." Chief Executive Officer Jensen Huang took the stage to show off the new Blackwell computing platform, headlined by the B200 chip, a 208-billion-transistor powerhouse that exceeds the performance of Nvidia's already class-leading AI accelerators. The chip promises to extend Nvidia's lead over rivals at a time when major businesses and even nations are making AI development a priority. After riding Blackwell's predecessor, Hopper, to a valuation of more than $2 trillion, Nvidia is setting high expectations for its latest product.

What is Nvidia’s new Blackwell B200 GPU?

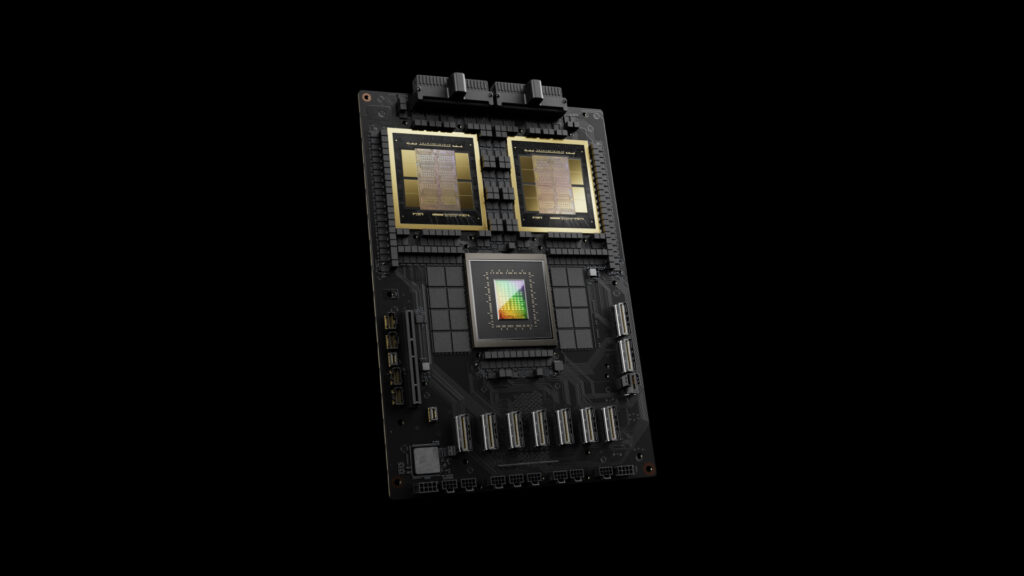

The B200 is Nvidia's new flagship AI product. It sits at the heart of an expansive ecosystem of products, including proprietary interconnect technology that lets Nvidia sell its chips in massive clusters that function as a single, unified processor. The Santa Clara, California-based company will sell the B200 in a variety of configurations, including as part of the GB200 superchip, which combines two Blackwell GPUs (graphics processing units) with one Grace CPU, a general-purpose central processing unit.

How powerful is Nvidia's new Blackwell chip?

Blackwell delivers 2.5 times Hopper's performance in training AI, according to Nvidia. Training is the compute-intensive process of feeding data into a model so that it learns the patterns, such as how to tell a cat from a dog, that let it serve a useful purpose. Training has been the focus of companies building their own in-house AI tools, and it has accounted for the bulk of Nvidia's AI semiconductor sales to date.

Putting a trained model to work, for example identifying cats and dogs in images it has never seen, is called inference, and Nvidia said Blackwell is five times as fast at that as its previous architecture. Once clustered into modules of dozens of chips, the new product family will offer 25 times greater power efficiency, Nvidia said. That improvement may be a major reason for customers to upgrade, as it could substantially cut operating costs for massive data centers. […]

How will Blackwell measure against competitors?

Advanced Micro Devices Inc., widely seen as Nvidia's closest rival, launched its most advanced chip, the MI300, late last year. Intel Corp., the longtime leader in CPUs, has fallen behind in the AI acceleration race and lacks technology comparable to Nvidia's current flagship products, let alone its Blackwell upgrades. Over time, Nvidia's own customers may pose the biggest threat to its competitive moat. Google, Amazon and Microsoft each have bespoke server processor designs either in use or in development. Ultimately, each of those major cloud computing players would prefer to build its own chips rather than rely on an outside supplier like Nvidia.
